Biography

I am a fifth-year Ph.D. student at Tsinghua University, advised by Prof. Gao Huang and Prof. Cheng Wu. My research interests lie in model architecture design, graph neural networks, and 3D computer vision.

I’m on the job market now! If you are interested, please contact me via email.

Download my resumé (CN/EN).

- Machine Learning

- Computer Vision

- Ph.D. in Automation, 2018-Present, Tsinghua University

- BSc in Automation, 2014-2018, Tsinghua University

Recent News

Selected Publications

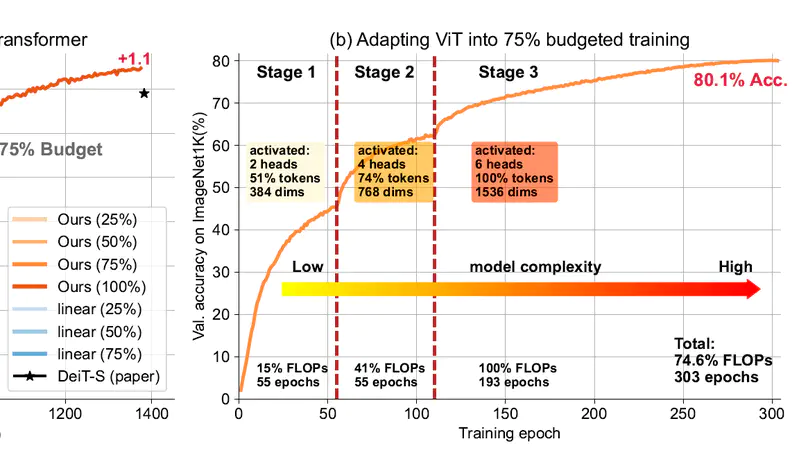

In this paper, we address the high training cost of Vision Transformers by proposing a framework that, from the perspective of model structure, enables training under any given budget while achieving competitive model performance.

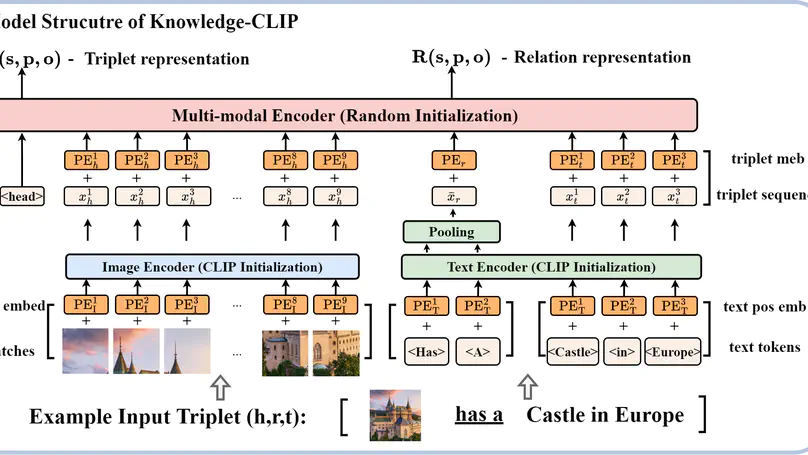

In this paper, we propose a knowledge-based pre-training framework, dubbed Knowledge-CLIP, that injects semantic information into the widely used CLIP model.

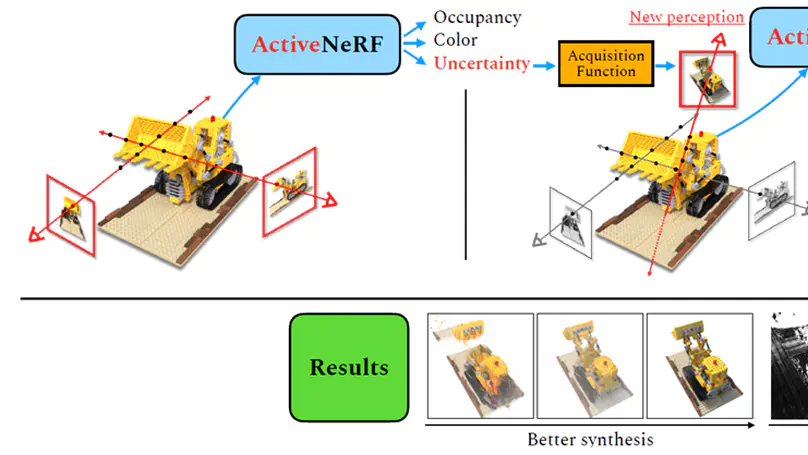

We present a novel learning framework, ActiveNeRF, aiming to model a 3D scene with a constrained input budget. We first incorporate uncertainty estimation into a NeRF model, which ensures robustness under few observations and provides an interpretation of how NeRF understands the scene. On this basis, we propose to supplement the existing training set with newly captured samples based on an active learning scheme. By evaluating the reduction of uncertainty given new inputs, we select the samples that bring the most information gain. In this way, the quality of novel view synthesis can be improved with minimal additional resources.

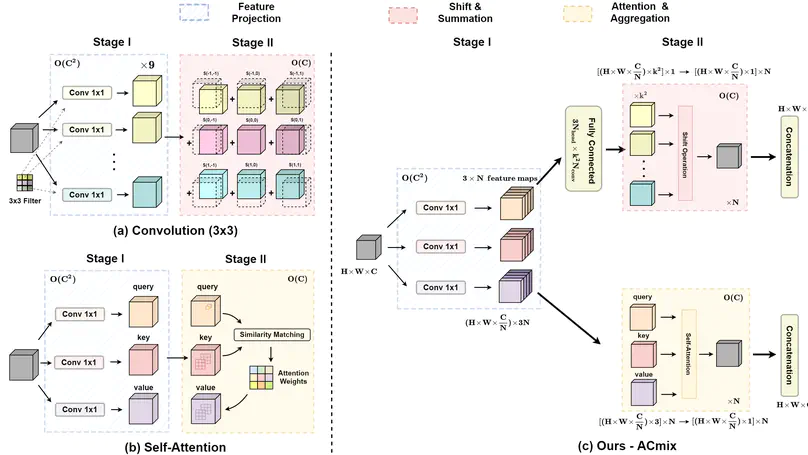

In this paper, we show that there exists a strong underlying relation between convolution and self-attention, in the sense that the bulk of the computation in these two paradigms is in fact done with the same operation. This observation naturally leads to an elegant integration of these two seemingly distinct paradigms, i.e., a mixed model that enjoys the benefits of both self-Attention and Convolution (ACmix), while having minimal computational overhead compared to its pure convolution or self-attention counterpart.

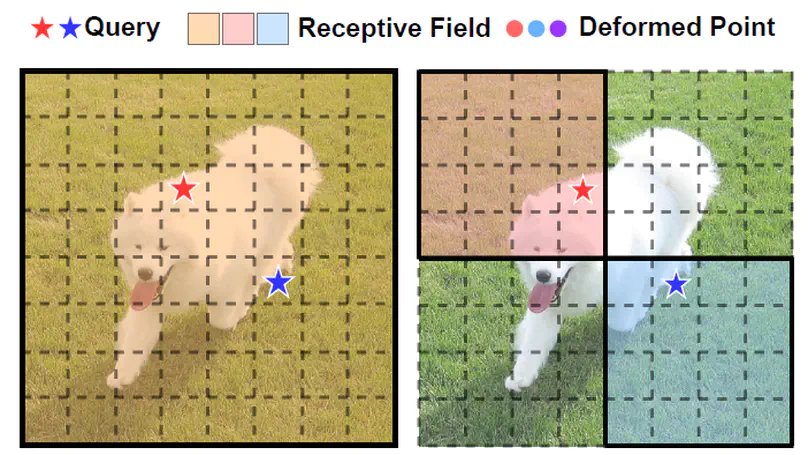

In this paper, we present Deformable Attention Transformer, a general backbone model with deformable attention for both image classification and dense prediction tasks.

Publications

Activities

Contact

- pxr18@mails.tsinghua.edu.cn

- Room 616, Central Main Building, Tsinghua University, Beijing 100084